Why This Matters Now

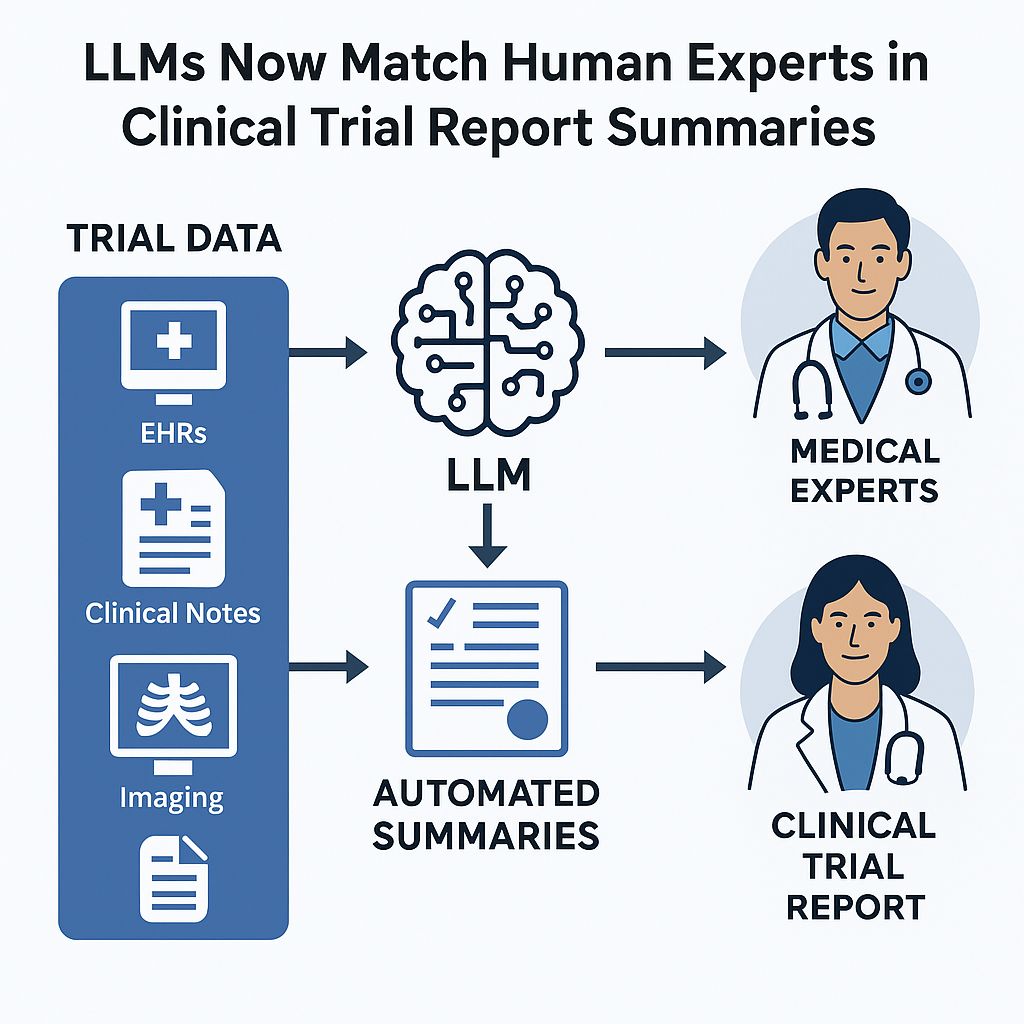

Generating medical reports (trial summaries, safety reports, progression events, etc.) is one of the biggest bottlenecks in biotech and pharmaceutical workflows. It’s costly, slow, manual, and often inconsistent. If LLMs can reliably generate, or assist in generating, these summaries from trial data or EHRs with high accuracy, the implications are massive: faster regulatory filings, better decision-making, higher trial throughput, lower costs, and fewer errors.

Context & Big Picture

What happened?

A study presented at the American Association for Cancer Research (AACR, September 2025) demonstrates that large language models (LLMs) can match human experts in identifying critical cancer progression events from electronic health records (EHRs) across 14 cancer types.

Other recent work (e.g. “DistillNote”) shows that LLM-based summarization of clinical notes not only compresses text drastically but also significantly improves downstream predictive tasks (e.g. heart failure prediction).

Broader Trend:

There has been a clear shift from proofs of concept toward real task performance on par with human experts (for certain defined tasks). The question has moved from “can we?” to “should we, and how do we deploy at scale?” Regulators, pharmaceutical companies, clinical research organizations (CROs), and hospital systems are now paying attention not just to performance, but also to faithfulness, traceability, style, and safety.

Here’s what the AACR cancer progression events study and related research show, explained in relatable, technical, and business-relevant terms:

Analogy to set the stage:

Think of a clinical trial summary as a large, complex puzzle: patient histories, lab tests, imaging, adverse event tables, progression events. Traditionally, assembling the puzzle is manual (medical writers, data curators, statisticians). The new research is saying: “What if an LLM could automatically pick up nearly every relevant piece and assemble the puzzle as well as a human, only faster and cheaper?”

Key findings:

Finding

What was done?

Performance / Outcome

Cancer Progression Event Identification

Using a Claude-based LLM to parse EHR data across 14 cancer types to identify progression events (e.g. when disease advances)

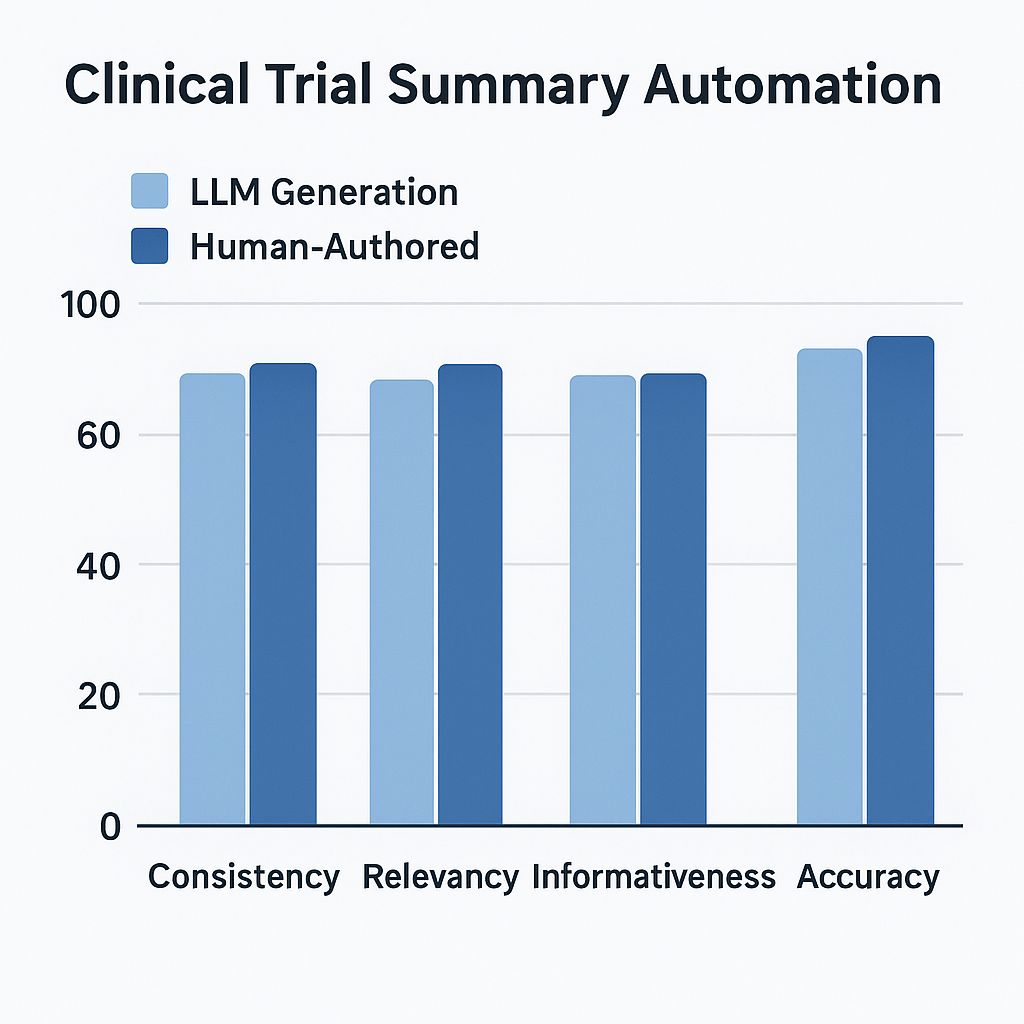

Nearly identical progression-free survival estimates compared to trained human abstractors.

Note Summarization + Diagnostic Value (DistillNote)

Generated summaries of admission notes (heart failure) using LLMs; compared downstream clinical prediction using original vs summaries

Distilled summaries delivered ~79% text compression; up to 18.2% improvement in AUPRC (Area Under Precision-Recall Curve) compared to model trained on full notes.

Why is this technically significant?

These are not cherry-picked cases: the results span multiple cancer types, real-world EHRs, and large note corpora.

The summarization isn’t just for readability: it has predictive value (i.e. better clinical signals in downstream tasks).

Compression (fewer tokens / shorter text) with minimal loss (or even gain) of signal means cost savings and faster review.
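To make the compression point concrete, here is a back-of-the-envelope sketch. The token counts and the per-token price are invented for illustration; only the ~79% figure echoes the DistillNote result cited above, and the function names are hypothetical.

```python
def compression_ratio(original_tokens: int, summary_tokens: int) -> float:
    """Fraction of tokens removed by summarization (0.79 means 79% shorter)."""
    if original_tokens <= 0:
        raise ValueError("original_tokens must be positive")
    return 1.0 - summary_tokens / original_tokens


def processing_cost(tokens: int, price_per_1k_tokens: float) -> float:
    """Cost of sending `tokens` through a model billed per 1,000 tokens."""
    return tokens / 1000 * price_per_1k_tokens


# Hypothetical numbers: a 2,000-token admission note distilled to 420 tokens.
original, summary = 2000, 420
price = 0.01  # assumed price per 1,000 tokens, in arbitrary currency units

ratio = compression_ratio(original, summary)
saved = processing_cost(original, price) - processing_cost(summary, price)
print(f"compression: {ratio:.0%}, saved per note: {saved:.4f}")
```

The per-note saving looks tiny in isolation; it matters once it is multiplied across every note that a downstream model or reviewer has to process.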

Technical Notes

For engineers, AI leads, and architects who want to understand what’s under the hood (without getting lost in the math):

Models / Architecture Used:

Claude-based LLMs (for cancer progression identification) in the AACR presentation.

In DistillNote: LLM summarization + structured / distilled summarization pipelines.

Datasets & Data Types:

EHRs (clinical notes, admission notes)

Longitudinal data (progression events over time)

Imaging / lab / billing codes sometimes incorporated (depending on pipeline)

Metrics & Evaluation:

AUPRC (Area Under Precision-Recall Curve) for predictive accuracy (heart failure example)

Comparing human annotator output vs. LLM output in terms of event detection, progression timelines.

Human evaluations / clinician preference (relevance, actionability, readability).
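One way to compare LLM output against human abstraction on event detection is to pair events by date and report precision and recall. The sketch below is an assumption about how such a comparison could be set up, not the protocol used in the AACR study; the 30-day tolerance window, the greedy pairing, and the function name `match_events` are all illustrative choices.

```python
from datetime import date


def match_events(llm_dates, human_dates, tolerance_days=30):
    """Greedily pair each human-abstracted event with the closest unused LLM event.

    Returns (precision, recall), treating the human abstraction as the reference.
    Greedy pairing is a simplification; it is not guaranteed to be optimal.
    """
    unused = sorted(llm_dates)
    matched = 0
    for ref in sorted(human_dates):
        # Closest remaining LLM event to this reference event, if any.
        best = min(unused, key=lambda d: abs((d - ref).days), default=None)
        if best is not None and abs((best - ref).days) <= tolerance_days:
            matched += 1
            unused.remove(best)
    precision = matched / len(llm_dates) if llm_dates else 0.0
    recall = matched / len(human_dates) if human_dates else 0.0
    return precision, recall


# Invented example: two human-abstracted progression events, three from the LLM.
human = [date(2024, 3, 1), date(2024, 9, 15)]
llm = [date(2024, 3, 10), date(2024, 6, 1), date(2024, 9, 20)]
precision, recall = match_events(llm, human)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this toy case the LLM finds both reference events but also reports one extra event, so recall is perfect while precision is not. Whether an extra event is a false positive or something the human missed is exactly the kind of question that needs clinician adjudication.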

Challenges / Technical caveats:

Faithfulness / hallucination risk: summarization tasks can leave out context or mis-interpret data if prompts or inputs are noisy.

OOV (Out-of-Vocabulary) medical terms: LLMs perform worse when many rare/unseen terms are present. Vocabulary adaptation helped.

Traceability: knowing which sentence or which note a summary sentence came from is key, especially for compliance.
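Sentence-level traceability can be pictured as a small data structure: each summary sentence carries pointers to the source sentences that support it. The sketch below is a hypothetical layout, not the TracSum format; `SummarySentence`, `untraceable`, and the note IDs are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SummarySentence:
    text: str
    # (note_id, sentence_index) pairs pointing at the supporting source sentences.
    sources: list[tuple[str, int]] = field(default_factory=list)


def untraceable(summary, notes):
    """Return summary sentences with no citation, or with a citation that does not resolve."""
    flagged = []
    for sent in summary:
        resolves = [
            note_id in notes and 0 <= idx < len(notes[note_id])
            for note_id, idx in sent.sources
        ]
        if not sent.sources or not all(resolves):
            flagged.append(sent)
    return flagged


# Invented example: one source note, three summary sentences.
notes = {"note-001": ["Patient admitted with dyspnea.", "CT shows new hepatic lesion."]}
summary = [
    SummarySentence("New hepatic lesion on CT.", [("note-001", 1)]),
    SummarySentence("Disease progression confirmed.", []),
    SummarySentence("Started second-line therapy.", [("note-002", 0)]),
]
for sent in untraceable(summary, notes):
    print("needs review:", sent.text)
```

This only checks that every citation points at a real source sentence. It does not check that the cited sentence actually supports the claim, which still requires a human reviewer or a separate verification step.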

Recent Related Advances:

TracSum: a benchmark for aspect-based summarization with sentence-level traceability in the medical domain. Helps measure and enforce traceability.

Medical report generation with heterogeneous federated learning: FedMRG model enabling multi-center medical image + report training without sharing raw data.

Actionable Business Insights

For biotech / pharma executives, clinical operations leads, CROs, and health system innovators, here’s what to do with this info:

Strategic Moves:

Pilot LLM-assisted summarization in your clinical trial reporting workflows now. Start with low-risk trials / internal reports to validate accuracy, regulatory acceptability, and cost savings.

Invest in data infrastructure: clean EHR data, standardized trial data, labeling, and feedback loops with clinicians so that LLM outputs can be improved and audited.

Address trust, compliance, and traceability early: build in mechanisms for verifying summary claims, ensuring every assertion can be traced back to source (e.g. table data, imaging, timestamps).
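As one very simple example of a mechanism for verifying summary claims, the sketch below flags any number in a summary that never appears in the source text. It is an illustrative heuristic under stated assumptions, not a validated faithfulness check: it compares digits as exact strings, so it will miss numbers written as words and will flag harmless reformatting such as "9.80" versus "9.8". The function name and the example texts are invented.

```python
import re

# Matches integers and simple decimals such as "14" or "9.8".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(summary: str, source: str) -> list[str]:
    """Return numbers that appear in the summary but nowhere in the source text."""
    source_numbers = set(NUMBER.findall(source))
    return [n for n in NUMBER.findall(summary) if n not in source_numbers]


# Invented example texts.
source = "Hemoglobin 9.8 g/dL on day 14. Two grade 3 adverse events reported in 120 patients."
summary = "Hemoglobin fell to 8.9 g/dL by day 14; 120 patients enrolled."

print(unsupported_numbers(summary, source))
```

A check like this is cheap enough to run on every generated summary, and anything it flags can be routed to a human reviewer rather than rejected automatically.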

Risk & Competitive Landscape:

Risks: Hallucinations, regulatory scrutiny, data privacy, variation across centers/datasets, domain drift (model trained on data that doesn’t represent your specific trial population or protocol).

Competitive advantage: Organizations that integrate LLM-based summarization well can cut turnaround times for reports, reduce medical writing costs, accelerate regulatory submission, and improve decision agility.

So what? Key Takeaways:

Time and cost savings are no longer theoretical: with compression + maintained (or improved) predictive signal, you get more “signal per token.”

Trust & accuracy are now feasible at scale, especially when using benchmarks like TracSum, or adopting federated/heterogeneous learning (e.g. FedMRG) to respect privacy and variation.

Regulatory compliance isn’t a blocker if you build traceability, human-in-the-loop validation, and documentation.

Case Study Deep Dive: Heart Failure Risk Prediction from Admission Notes (DistillNote)

What was done: DistillNote generated summary versions of admission notes for patients. Summaries were then used as inputs to predictive models (forecasting heart failure).

Impact / ROI:

~79% text compression → reduced reading, storage, and processing cost.

Up to 18.2% improvement in AUPRC compared to using full notes.

Clinicians preferred one-step summaries for relevance and actionability.
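For readers less familiar with AUPRC, the sketch below computes average precision (a common step-wise estimate of the area under the precision-recall curve) for two sets of model scores and then the relative change between them. The labels and scores are toy values invented for illustration, and the source does not say whether the 18.2% figure is a relative or an absolute improvement; the relative calculation shown here is only one possible reading.

```python
def average_precision(labels, scores):
    """Average precision, a common step-wise estimate of AUPRC.

    labels: 0/1 ground truth; scores: higher means more likely positive.
    Assumes no tied scores, to keep the sketch short.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive label")
    hits, ap = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            ap += hits / rank  # precision at the rank where this positive is recalled
    return ap / total_pos


def relative_improvement(baseline, new):
    """Relative change from baseline to new, e.g. 0.182 for an 18.2% gain."""
    return (new - baseline) / baseline


# Toy data: 1 = patient developed heart failure, 0 = did not.
labels = [1, 0, 1, 0, 0, 1]
full_note_scores = [0.9, 0.8, 0.4, 0.7, 0.2, 0.3]  # model trained on full notes
summary_scores = [0.9, 0.3, 0.8, 0.4, 0.2, 0.7]    # model trained on summaries

ap_full = average_precision(labels, full_note_scores)
ap_summary = average_precision(labels, summary_scores)
print(f"full notes: {ap_full:.3f}, summaries: {ap_summary:.3f}, "
      f"relative change: {relative_improvement(ap_full, ap_summary):.1%}")
```

AUPRC is usually preferred over accuracy when positives are rare, which is typical for clinical outcomes: it rewards a model for ranking the few true cases near the top.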

Challenges & Ethical Implications:

Possible loss of nuance in rare cases.

Generalization risk across diseases, languages, and note formats.

Need for clinician oversight to ensure safety.

Options for Summaries in Clinical Trial or Report Data

Direct Summarization (LLM):

Strengths: Fastest output, minimal pipeline complexity.

Weaknesses: Risk of omitted detail, hallucinations, inconsistent style.

Best For: Internal reports, early-stage trials, non-regulatory documents.

Effort / Cost: Low to moderate (prompt engineering + fine-tuning).

Structured / Distilled Summarization:

Strengths: More control, strong compression, maintains key signals, reduces overload.

Weaknesses: Requires structured templates, more engineering.

Best For: Prediction tasks, high patient volume use cases.

Effort / Cost: Moderate to high.

Federated / Multi-Center LLM Training (e.g., FedMRG):

Strengths: Protects privacy, handles variability across sites, enables generalization.

Weaknesses: Infrastructure heavy, coordination across sites, higher complexity.

Best For: Large pharma or global trial consortia with sensitive data.

Effort / Cost: High.

Traceability & Aspect-Based Summarization (TracSum):

Strengths: Enables audits, regulatory compliance, and trust.

Weaknesses: Requires annotation and alignment work.

Best For: Safety reporting, regulatory submissions, external oversight.

Effort / Cost: Moderate.

Closing Thoughts

The field is shifting toward signal over volume. In medical report generation and trial report writing, the marginal value of every extra sentence is shrinking unless it drives a decision or supports compliance. The organizations that master high-accuracy, traceable, low-latency summarization will gain more than efficiency: they’ll command trust, speed, and regulatory readiness.

If you’re running clinical trials, managing medical writing, or overseeing regulatory submissions, this isn’t about whether to explore LLM summarization; it’s about how fast you can adopt and internalize it.